Large language models (LLMs), which are also effective syntax engines, tend to “hallucinate” (i.e., invent outputs from parts of their training data). While these unfocused results may be fun, they are not always factually grounded. Retrieval-augmented generation (RAG) provides a way to “ground” answers within a selected body of content. Also, rather than costly retraining or fine-tuning of an LLM, this approach allows for quick data updates at low cost. See the primary sources “REALM: Retrieval-Augmented Language Model Pre-Training” by Kelvin Guu, et al. at Google, and “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” by Patrick Lewis, et al. at Facebook, both published in 2020.

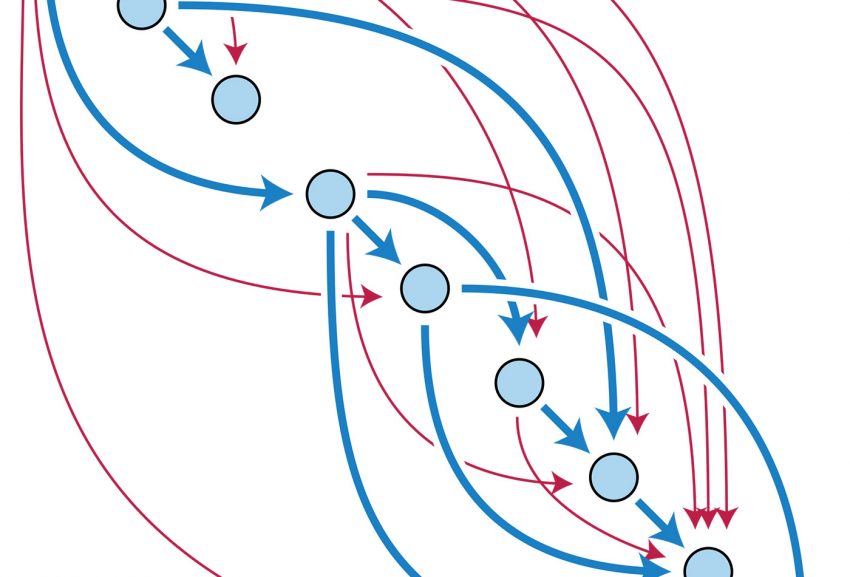

Here’s a simple, rough sketch of RAG:

Start with a collection of documents about a domain.

Split each document into chunks.
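As a minimal sketch, assuming word-based windows (real pipelines often split by tokens, sentences, or document structure instead), chunking with a small overlap between neighboring chunks might look like this. The `chunk_text` function and its `size`/`overlap` parameters are hypothetical names chosen for illustration.

```python
def chunk_text(text: str, size: int = 200, overlap: int = 20) -> list[str]:
    """Hypothetical helper: split text into windows of `size` words.

    Consecutive windows share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one chunk.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end
            break
    return chunks


doc = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_text(doc, size=5, overlap=2):
    print(chunk)
```

The overlap trades a little storage for robustness: with `size=5, overlap=2`, each chunk repeats the last two words of the previous one.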

Every chunk of textual data is processed through an embedding model to generate a vector representing it.
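The embedding model is normally a trained neural network. Purely to keep this sketch self-contained, the code below swaps in a toy stand-in, a hashed bag-of-words vector (`toy_embed` is a hypothetical name). It captures word overlap but none of the semantic similarity a real embedding model provides; the one property it shares with a real model is that the same text always maps to the same vector.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Toy stand-in for an embedding model (NOT a real one).

    Hashes each lowercase token into one of `dim` buckets, counts hits,
    then scales the vector to unit length so cosine similarity is a dot product.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # sha256 is stable across runs, unlike Python's built-in hash() for str
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


v = toy_embed("Graphs reveal nonintuitive connections")
print(len(v), round(sum(x * x for x in v), 6))
```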

Store these chunks in a vector database, indexed by their embedding vectors.
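Production systems use a dedicated vector database, typically with approximate nearest-neighbor indexes. As a hypothetical stand-in, the in-memory class below stores each chunk with its vector and answers queries by brute-force cosine similarity. The class and method names are illustrative only, not any particular database's API.

```python
import math


class InMemoryVectorStore:
    """Hypothetical toy vector store: keeps (chunk_id, text, vector) rows
    and scans all of them on every query (no index)."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, str, list[float]]] = []

    def add(self, chunk_id: str, text: str, vector: list[float]) -> None:
        self.rows.append((chunk_id, text, vector))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        na = math.sqrt(sum(x * x for x in a))
        nb = math.sqrt(sum(y * y for y in b))
        return dot / (na * nb) if na and nb else 0.0  # zero vectors match nothing

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the k nearest chunk ids with their cosine similarity, best first."""
        scored = [(cid, self._cosine(query, vec)) for cid, _text, vec in self.rows]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


store = InMemoryVectorStore()
store.add("a", "chunk A", [1.0, 0.0])
store.add("b", "chunk B", [0.0, 1.0])
store.add("c", "chunk C", [1.0, 1.0])
print([cid for cid, _ in store.search([1.0, 0.1], k=2)])  # prints ['a', 'c']
```

A brute-force scan is linear in the number of chunks, which is why real vector databases add an index; the ranking logic is the same.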

When a question gets asked, run its text through the same embedding model, determine which chunks are its nearest neighbors, and present those chunks to the LLM as a ranked list from which it generates a response. While the overall process may be more complicated in practice, this is the gist.
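Once the nearest neighbors are known, the remaining step is handing them to the LLM. A minimal sketch of that hand-off, assuming the retrieved chunks arrive as `(text, score)` pairs: the prompt wording and the `build_rag_prompt` name are hypothetical, and the actual LLM call is left out because it depends on the model provider.

```python
def build_rag_prompt(question: str, retrieved: list[tuple[str, float]],
                     max_chunks: int = 3) -> str:
    """Hypothetical helper: format retrieved chunks as a ranked context list.

    `retrieved` holds (chunk_text, similarity) pairs; higher scores rank first.
    """
    ranked = sorted(retrieved, key=lambda pair: pair[1], reverse=True)[:max_chunks]
    lines = ["Answer the question using only the context below.", "", "Context:"]
    for rank, (text, _score) in enumerate(ranked, start=1):
        lines.append(f"{rank}. {text}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


prompt = build_rag_prompt(
    "What is RAG?",
    [("alpha", 0.2), ("beta", 0.9), ("gamma", 0.5)],
    max_chunks=2,
)
print(prompt)
```

Capping the list with `max_chunks` keeps the prompt within the model's context window; the lowest-scoring chunk (`alpha` here) is dropped.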

The various flavors of RAG borrow from recommender-system practices, such as the use of vector databases and embeddings. Large-scale production recommenders, search engines, and other discovery systems also have a long history of leveraging knowledge graphs, for example at Amazon, Alphabet, Microsoft, LinkedIn, eBay, and Pinterest.

What is GraphRAG?

Graph technologies help reveal nonintuitive connections within data. For example, articles about former US Vice President Al Gore might not discuss actor Tommy Lee Jones, although the two were roommates at Harvard and started a country band together. Graphs allow for searches across multiple hops (that is, the ability to explore neighboring concepts recursively), such as identifying links between Gore and Jones.
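To make the multi-hop idea concrete, here is a sketch of breadth-first search over a tiny, hand-built graph. The triples are toy data restating only the facts in the paragraph above (the `country band` node is a hypothetical placeholder, since the band is not named), and `shortest_path` is an illustrative helper, not a graph database's query API.

```python
from collections import deque

# Toy (subject, relation, object) triples; "country band" is a placeholder node.
TRIPLES = [
    ("Al Gore", "held_office", "US Vice President"),
    ("Al Gore", "attended", "Harvard"),
    ("Tommy Lee Jones", "attended", "Harvard"),
    ("Al Gore", "member_of", "country band"),
    ("Tommy Lee Jones", "member_of", "country band"),
]


def build_graph(triples):
    """Undirected adjacency sets; relation labels are ignored for path finding."""
    adj: dict[str, set[str]] = {}
    for subj, _rel, obj in triples:
        adj.setdefault(subj, set()).add(obj)
        adj.setdefault(obj, set()).add(subj)
    return adj


def shortest_path(adj, start, goal, max_hops=4):
    """Breadth-first search: returns the fewest-hop path, or None if none exists."""
    if start == goal:
        return [start]
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        if len(path) - 1 >= max_hops:
            continue  # do not expand beyond the hop limit
        for nbr in sorted(adj.get(path[-1], ())):  # sorted for deterministic output
            if nbr in seen:
                continue
            if nbr == goal:
                return path + [nbr]
            seen.add(nbr)
            frontier.append(path + [nbr])
    return None


adj = build_graph(TRIPLES)
print(shortest_path(adj, "Al Gore", "Tommy Lee Jones"))
# prints ['Al Gore', 'Harvard', 'Tommy Lee Jones']
```

Neither endpoint mentions the other directly, yet two hops through a shared neighbor connect them; this is the kind of link that nearest-neighbor text similarity alone can miss.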

“Retrieval-Augmented Generation using Language Model (LLM) with Knowledge Graph (KG),” and an excellent recent survey paper, “Graph Retrieval-Augmented Generation: A Survey” by Boci Peng, et al.

However, the “graph” in GraphRAG can refer to several different things, which is perhaps one of the more important points to understand here. One way to build a graph is to connect each text chunk in the vector store with its neighbors. The “distance” between each pair of neighbors can be interpreted as a probability. When a question prompt arrives, run graph algorithms to traverse this probabilistic graph, then feed a ranked index of the collected chunks to the LLM. This is part of how the Microsoft GraphRAG approach works.
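Which graph algorithms to run is left open here, so the following is only one plausible reading, not Microsoft's implementation: treat pairwise chunk similarities as edge weights, normalize each row into transition probabilities, and rank chunks with a random walk with restart (personalized PageRank) seeded at the chunks nearest to the prompt. The similarity matrix and all helper names are hypothetical.

```python
def transition_probs(sim: list[list[float]]) -> list[list[float]]:
    """Row-normalize a chunk-similarity matrix into transition probabilities."""
    n = len(sim)
    probs = []
    for i in range(n):
        row = [sim[i][j] if j != i else 0.0 for j in range(n)]  # no self-loops
        total = sum(row)
        # a chunk with no neighbors jumps uniformly, so every row still sums to 1
        probs.append([w / total for w in row] if total > 0 else [1.0 / n] * n)
    return probs


def personalized_pagerank(probs, seeds: set[int], alpha=0.85, iters=50):
    """Random walk with restart: at each step, with prob. 1 - alpha,
    jump back to one of the seed chunks (the prompt's nearest neighbors)."""
    n = len(probs)
    restart = [1.0 / len(seeds) if i in seeds else 0.0 for i in range(n)]
    score = restart[:]
    for _ in range(iters):  # power iteration
        nxt = [(1 - alpha) * r for r in restart]
        for i in range(n):
            for j in range(n):
                nxt[j] += alpha * score[i] * probs[i][j]
        score = nxt
    return score


def rank_chunks(sim, seeds, k=3):
    """Hypothetical helper: chunk indices ordered by random-walk score."""
    scores = personalized_pagerank(transition_probs(sim), set(seeds))
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


# Toy similarities: chunks 0-1 and 2-3 are tightly linked, with weak cross links.
sim = [
    [1.0, 0.9, 0.1, 0.0],
    [0.9, 1.0, 0.1, 0.0],
    [0.1, 0.1, 1.0, 0.9],
    [0.0, 0.0, 0.9, 1.0],
]
print(rank_chunks(sim, seeds=[0], k=4))  # prints [0, 1, 2, 3]
```

Unlike plain nearest-neighbor retrieval, the walk can surface chunk 2 and chunk 3 even though chunk 3 shares no direct similarity with the seed.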

Another approach uses a subgraph of related domain knowledge, where nodes in the subgraph represent concepts and link to text chunks in the vector store. When a prompt arrives, convert it into a graph query, then take nodes from the query result and feed their string representations, along with the related chunks, to the LLM.
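A minimal sketch of this flow follows. Converting a prompt into a graph query is usually done with a query language such as Cypher, often generated by an LLM; here a naive stand-in simply matches concept names in the prompt and expands one hop. The `Concept` class, helper names, and toy data are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    """Hypothetical domain-graph node that links to chunk ids in the vector store."""
    name: str
    description: str
    chunk_ids: list[str] = field(default_factory=list)
    related: list[str] = field(default_factory=list)


def graph_query(prompt: str, graph: dict[str, Concept]) -> list[Concept]:
    """Naive stand-in for a real graph query: match concept names, expand one hop."""
    text = prompt.lower()
    result = [name for name in graph if name.lower() in text]
    for name in list(result):
        for nbr in graph[name].related:
            if nbr in graph and nbr not in result:
                result.append(nbr)
    return [graph[name] for name in result]


def build_context(prompt: str, graph: dict[str, Concept], chunks: dict[str, str]) -> str:
    """Combine node string representations with the chunks they link to."""
    nodes = graph_query(prompt, graph)
    chunk_ids: list[str] = []
    for node in nodes:
        for cid in node.chunk_ids:
            if cid not in chunk_ids:
                chunk_ids.append(cid)
    node_lines = [f"{n.name}: {n.description}" for n in nodes]
    return "\n".join(["Concepts:", *node_lines, "Passages:", *(chunks[c] for c in chunk_ids)])


graph = {
    "vector database": Concept("vector database", "stores chunks indexed by embedding vectors",
                               ["c1"], ["embedding"]),
    "embedding": Concept("embedding", "a vector computed for a chunk of text", ["c2"]),
    "knowledge graph": Concept("knowledge graph", "nodes and relations about a domain", ["c3"]),
}
chunks = {
    "c1": "Chunks are stored in a vector database.",
    "c2": "Each chunk is run through an embedding model.",
    "c3": "Graphs support multi-hop search.",
}
ctx = build_context("How does a vector database find similar text?", graph, chunks)
print(ctx)
```

The one-hop expansion is what pulls in the `embedding` passage even though the prompt never mentions embeddings.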

Going a step further, some GraphRAG approaches use a lexical graph, built by parsing the chunks to extract entities and relations from the text, which is then used to build a domain subgraph. Treat an incoming prompt as a graph query and use the result set to select chunks for the LLM. Good examples are described in the GraphRAG Manifesto by Philip Rathle at Neo4j.
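Real systems typically extract entities and typed relations with an NER model or an LLM. To stay self-contained, this sketch assumes a fixed, hypothetical entity vocabulary and treats co-occurrence within a sentence as an untyped relation. The prompt is then handled as a graph query: chunks on edges connecting its entities are preferred, with a fallback to any chunk mentioning one of them. All function names and data are illustrative.

```python
import re
from collections import defaultdict
from itertools import combinations


def extract_entities(text: str, vocabulary: list[str]) -> list[str]:
    """Toy entity extraction (assumption): case-insensitive lookup of known names."""
    lowered = text.lower()
    return sorted({e for e in vocabulary if e.lower() in lowered})


def build_lexical_graph(chunks: dict[str, str], vocabulary: list[str]):
    """Return (entity -> chunk ids, (entity, entity) edge -> chunk ids)."""
    mentions: dict[str, set[str]] = defaultdict(set)
    edges: dict[tuple[str, str], set[str]] = defaultdict(set)
    for cid, text in chunks.items():
        for sentence in re.split(r"(?<=[.!?])\s+", text):
            ents = extract_entities(sentence, vocabulary)
            for e in ents:
                mentions[e].add(cid)
            # entities sharing a sentence get an untyped co-occurrence edge
            for a, b in combinations(ents, 2):
                edges[(a, b)].add(cid)
    return mentions, edges


def select_chunks(prompt, vocabulary, mentions, edges) -> list[str]:
    """Treat the prompt as a graph query: prefer chunks connecting its entities."""
    query_ents = extract_entities(prompt, vocabulary)  # sorted, like the edge keys
    selected: set[str] = set()
    for a, b in combinations(query_ents, 2):
        selected |= edges.get((a, b), set())
    if not selected:  # fall back to any chunk mentioning a query entity
        for e in query_ents:
            selected |= mentions.get(e, set())
    return sorted(selected)


vocabulary = ["Al Gore", "Tommy Lee Jones", "Harvard", "US Vice President"]
chunks = {
    "c1": "Al Gore and Tommy Lee Jones were roommates at Harvard. They started a country band.",
    "c2": "Al Gore served as US Vice President.",
}
mentions, edges = build_lexical_graph(chunks, vocabulary)
print(select_chunks("How are Al Gore and Tommy Lee Jones connected?", vocabulary, mentions, edges))
# prints ['c1']
```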